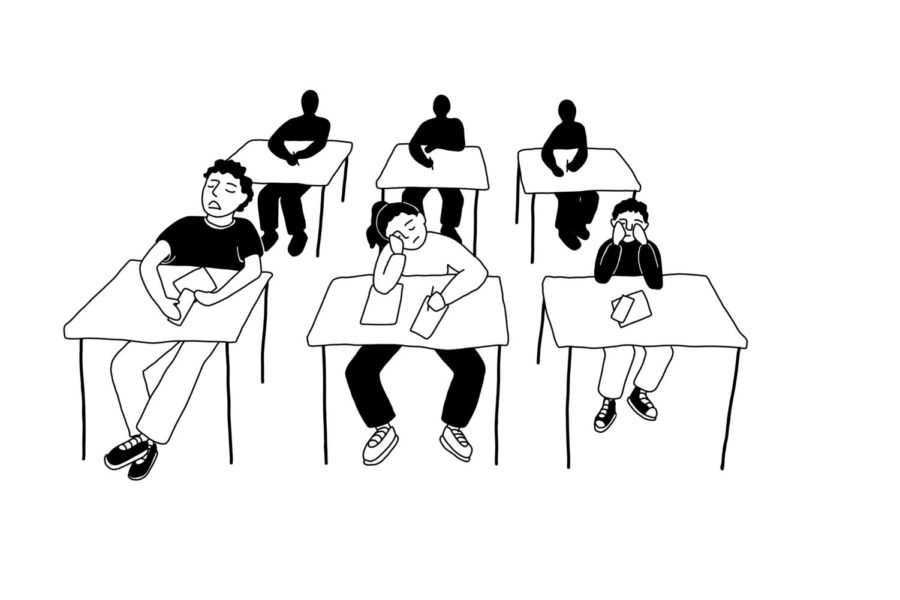

Whenever AI and education are brought up in the same sentence, many people automatically think “cheating”: a fast track for students to find answers and avoid monotonous homework, a shortcut that keeps students from doing the hard work of research and critical thinking themselves.

But there’s a more pressing issue concerning the use of AI that students and teachers alike should be afraid of: what happens when AI can think like a human?

What will it mean when AI technologies aren’t limited by predefined scopes and programs, but can instead think, adapt and reason abstractly and independently? What happens when students use ChatGPT not only as a tool, but as a replacement for their own thinking?

Granted, these kinds of AI technologies are purely theoretical; the only kinds of AI that exist – from ChatGPT to the Netflix recommendation engine – function on preprogrammed information and instructions. Called narrow AI (or weak AI), these technologies can appear to think for themselves but are still years away from actually doing so. Predictions for when AI that matches the cognitive abilities of a human, called strong AI or artificial general intelligence (AGI), will exist vary widely among AI researchers, ranging from 2027 to 2047 and beyond, according to Cornell University.

Considering this timeline, some might argue that this is a distant and irrelevant concern. However, why would so many educators be wary of the technology if it were just another harmless tool?

When the internet was created and “online” became a reality, people, too, resisted the advancements that quickly came to restructure how we communicate and, most importantly, learn in the modern world. With the depth of information that the internet freely provided students, the American education system began moving away from teaching students to regurgitate dates and facts, which had quickly become accessible online, and toward valuing independent critical thinking.

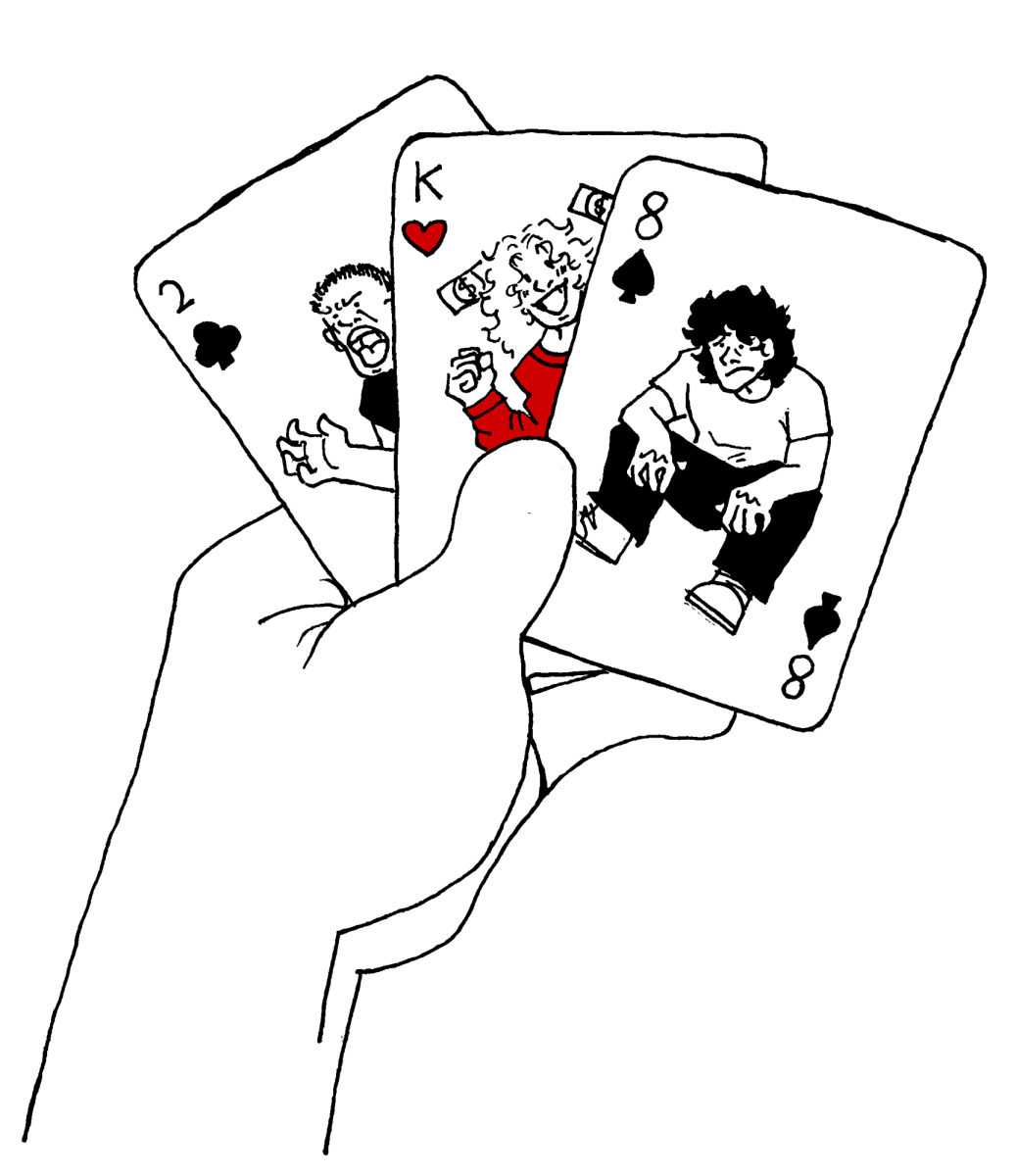

But once AI can not only find that information – much like a Google search – but also analyze it for us, what are we left to do as students? What makes our thinking more valuable than that of ChatGPT, which does the work faster, and maybe even better, than we do?

Ultimately, it comes down to the fact that a human brain has something that will forever be more important than an AI’s efficiency and convenience: creativity, and with that, imperfection.

If all we are looking for is the “right” answer, then AI is the obvious solution. However, trading a human mind for a mechanical one means narrowing our collective field of vision before we have even seen the whole field. Replacing human thinking with AI’s means losing the potential for the mistakes and imperfections that have led to discoveries like penicillin, which stemmed not from an algorithm but from curiosity and chance. If we create a predefined box for AI (and subsequently humanity) to think inside of, there will never be an opportunity to think outside of it.

Thus, AI technologies should be taught and incorporated into the classroom, but to enhance rather than replace student thinking. Our education system – and we as students – must come to view AI as a tool rather than a necessity. This technology can be helpful to an extent, but relying on it too heavily will eventually cost us our own creativity.